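Anyone with Nutanix lab blocks that need to be started and stopped frequently may appreciate these scripts. The first powers on the hosts and starts the cluster; the second stops the cluster and powers everything off.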


```powershell
#Nutanix Startup Script
#This script leverages the SSH.NET PowerShell module from http://www.powershelladmin.com/wiki/SSH_from_PowerShell_using_the_SSH.NET_library
#Download it at: http://www.powershelladmin.com/w/images/a/a5/SSH-SessionsPSv3.zip
Import-Module SSH-Sessions

#This script records timestamps to measure how long each element takes to start up
$scriptStart = (Get-Date)

#Define all the IP addresses in your cluster
$cvmIPs = "10.0.0.71", "10.0.0.72", "10.0.0.73"
$ipmiIPs = "10.0.0.81", "10.0.0.82", "10.0.0.83"
$hostIPs = "10.0.0.91", "10.0.0.92", "10.0.0.93"

#Install ipmiutil in a directory that is included in the PATH variable
#(PowerShell does not run executables from the current directory without a .\ prefix)
#I installed ipmiutil in c:\windows\system32\
#Use ipmiutil to power on the hosts
foreach ($ipmiIP in $ipmiIPs) {ipmiutil reset -u -N $ipmiIP -U ADMIN -P ADMIN}
write-host "Starting Hosts"

#Ping the hosts until they are online
$ping = new-object System.Net.NetworkInformation.Ping
foreach ($hostIP in $hostIPs) {
    do {$result = $ping.Send($hostIP); write-host "." -NoNewline; sleep 1} Until ($result.Status -eq "Success")
    write-host "$hostIP ONLINE"
}

#Log the startup time for the ESXi hosts
$hostsStarted = (Get-Date)

#Wait until the CVMs are booted
foreach ($cvmIP in $cvmIPs) {
    do {$result = $ping.Send($cvmIP); write-host "." -NoNewline; sleep 1} Until ($result.Status -eq "Success")
    write-host "$cvmIP ONLINE"
}

#Log the startup time for the CVMs
$cvmStarted = (Get-Date)
sleep 10

#Log into the first CVM and start the cluster if it is stopped
New-SshSession -ComputerName $cvmIPs[0] -Username 'nutanix' -Password 'nutanix/4u'
$result = Invoke-SshCommand -ComputerName $cvmIPs[0] -Command '/home/nutanix/cluster/bin/cluster status | grep state'
If ($result -eq 'The state of the cluster: stop') {Invoke-SshCommand -ComputerName $cvmIPs[0] -Command '/home/nutanix/cluster/bin/cluster start'}
sleep 5
Remove-SshSession -RemoveAll

#Log the end time
$scriptEnd = (Get-Date)

#Calculate the timespans
$hostStartTime = New-TimeSpan -Start $scriptStart -End $hostsStarted
$cvmStartTime = New-TimeSpan -Start $hostsStarted -End $cvmStarted
$storageStartTime = New-TimeSpan -Start $cvmStarted -End $scriptEnd
$totalTime = New-TimeSpan -Start $scriptStart -End $scriptEnd

#Write a time summary
write-host "ESXi Boot Time"
write-host $hostStartTime
write-host "CVM Startup Time"
write-host $cvmStartTime
write-host "Storage Startup Time"
write-host $storageStartTime
write-host "Total Script Time"
write-host $totalTime
```
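Both scripts decide what to do by comparing the output of `cluster status | grep state` against an exact string such as `'The state of the cluster: stop'`. If that output ever carries trailing whitespace or extra lines, the exact comparison quietly fails and the cluster is neither started nor stopped. A slightly more tolerant check could extract the state word with a regular expression. This is only a sketch: `Get-NutanixClusterState` is a hypothetical helper name (not part of SSH-Sessions or any Nutanix tooling), and it assumes the state line reads `The state of the cluster: <state>`, as the scripts expect.

```powershell
# Hypothetical helper (not part of SSH-Sessions or Nutanix tooling):
# pull the state word out of 'cluster status | grep state' output.
# Assumes a line of the form "The state of the cluster: <state>".
function Get-NutanixClusterState {
    param([string[]]$Lines)

    foreach ($line in $Lines) {
        # -match tolerates extra text or whitespace around the state line
        if ($line -match 'The state of the cluster:\s*(\w+)') {
            return $Matches[1]
        }
    }
    # No state line found (for example, the SSH command failed)
    return $null
}

# Example with captured text instead of a live SSH call
$sample = @('', 'The state of the cluster: stop  ')
$state = Get-NutanixClusterState -Lines $sample
write-host "Cluster state: $state"
```

In the startup script the check would then read `If ((Get-NutanixClusterState -Lines $result) -eq 'stop') {...}`, and the shutdown script would compare against `'start'` the same way.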


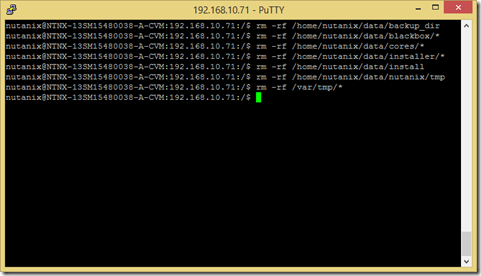

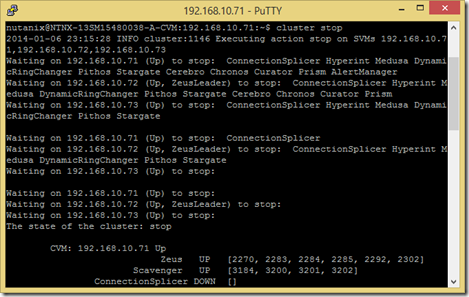

```powershell
#Nutanix Shutdown Script
#Add all of the IPs to arrays
$cvmIPs = "10.0.0.71", "10.0.0.72", "10.0.0.73"
$ipmiIPs = "10.0.0.81", "10.0.0.82", "10.0.0.83"
$hostIPs = "10.0.0.91", "10.0.0.92", "10.0.0.93"

#Get script start time so we can see how long it takes for the script to run
$scriptStart = (Get-Date)

#This script leverages the SSH.NET PowerShell module from http://www.powershelladmin.com/wiki/SSH_from_PowerShell_using_the_SSH.NET_library
#Download it at: http://www.powershelladmin.com/w/images/a/a5/SSH-SessionsPSv3.zip
Import-Module SSH-Sessions

#Stop the cluster from the first CVM if it is running, then re-check its state
New-SshSession -ComputerName $cvmIPs[0] -Username 'nutanix' -Password 'nutanix/4u'
$result = Invoke-SshCommand -ComputerName $cvmIPs[0] -Command '/home/nutanix/cluster/bin/cluster status | grep state'
If ($result -eq 'The state of the cluster: start') {Invoke-SshCommand -ComputerName $cvmIPs[0] -Command '/home/nutanix/cluster/bin/cluster stop'}
sleep 10
$result = Invoke-SshCommand -ComputerName $cvmIPs[0] -Command '/home/nutanix/cluster/bin/cluster status | grep state'
Remove-SshSession -RemoveAll

#Only power anything off once the cluster reports that it is stopped;
#otherwise the ping loops below would wait forever for CVMs that never go down
If (-not ($result -eq 'The state of the cluster: stop')) {
    write-host "Cluster is not stopped; leaving the CVMs and hosts running"
    exit
}

#Shut down each CVM
foreach ($cvmIP in $cvmIPs) {
    New-SshSession -ComputerName $cvmIP -Username 'nutanix' -Password 'nutanix/4u'
    Invoke-SshCommand -ComputerName $cvmIP -Command 'sudo /sbin/shutdown -h 0'
}
Remove-SshSession -RemoveAll

#Ping the CVMs until they are shut down
$ping = new-object System.Net.NetworkInformation.Ping
write-host "Shutting down CVMs"
foreach ($cvmIP in $cvmIPs) {
    do {$pingResult = $ping.Send($cvmIP); write-host "." -NoNewline; sleep 1} Until ($pingResult.Status -ne "Success")
}

#Power off the hosts via IPMI and wait until they stop answering pings
write-host "Shutting down hosts"
foreach ($ipmiIP in $ipmiIPs) {ipmiutil reset -D -N $ipmiIP -U ADMIN -P ADMIN}
foreach ($hostIP in $hostIPs) {
    do {$pingResult = $ping.Send($hostIP); write-host "." -NoNewline; sleep 1} Until ($pingResult.Status -ne "Success")
}

#Print how long the script took to run
$scriptEnd = (Get-Date)
$runTime = New-TimeSpan -Start $scriptStart -End $scriptEnd
write-host "`nScript Run Time:`n$runTime"
```
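The `do {...} Until(...)` ping loops in both scripts have no upper bound: if a host never comes up, or never stops answering (for example because an IPMI power command failed), the script waits forever. A bounded wait is one way to avoid that. The sketch below is an assumption-laden illustration, not part of the original scripts: `Test-Ping` and `Wait-Until` are hypothetical helper names, and the 1000 ms ping timeout and 600-second default limit are arbitrary choices.

```powershell
# Hypothetical helpers (not from any module): bounded polling so a host that
# never changes state cannot hang the script.

function Test-Ping {
    param(
        [string]$Address,
        [int]$TimeoutMs = 1000
    )
    $ping = New-Object System.Net.NetworkInformation.Ping
    try {
        # Send(string, int) waits at most $TimeoutMs milliseconds for a reply
        $reply = $ping.Send($Address, $TimeoutMs)
        return ($reply.Status -eq [System.Net.NetworkInformation.IPStatus]::Success)
    }
    catch {
        # Unresolvable names and similar failures throw; treat them as "down"
        return $false
    }
    finally {
        $ping.Dispose()
    }
}

function Wait-Until {
    param(
        [scriptblock]$Condition,
        [int]$TimeoutSeconds = 600,
        [int]$IntervalSeconds = 1
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while (-not (& $Condition)) {
        # Give up once the deadline has passed; the caller decides what to do
        if ((Get-Date) -ge $deadline) { return $false }
        Write-Host "." -NoNewline
        Start-Sleep -Seconds $IntervalSeconds
    }
    return $true
}
```

For instance, the CVM loop in the shutdown script could call `Wait-Until -Condition { -not (Test-Ping $cvmIP) } -TimeoutSeconds 300` for each CVM and print a warning when it returns `$false`, instead of looping indefinitely.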