My 1350 lab block came with Nutanix OS 3.1.3. A block refers to a 2U chassis with 4 nodes (or in my case 3, as that is the minimum number of nodes required to create a storage cluster) and Nutanix OS refers to the abstracted virtual storage controller and not the bare metal hypervisor.

Below is a node that I have removed that is sitting on top of the chassis.

The bare metal server node is currently running VMware ESXi 5.0 and the Nutanix OS runs as a virtual machine. All of the physical disks are presented to this VM through the use of Direct PassThru.

The latest version of Nutanix OS is 3.5.2.1, so I want to run through the upgrade procedure.

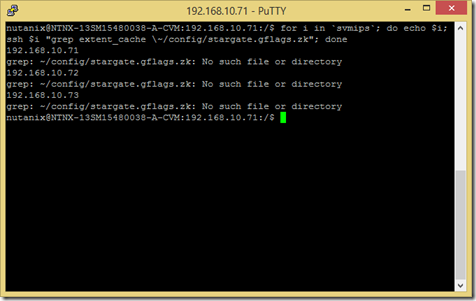

- Log onto a Controller VM (CVM – another name for Storage Controller or Nutanix OS VM). Run the following command to check for the extent_cache parameter.

for i in $(svmips); do echo $i; ssh $i "grep extent_cache ~/config/stargate.gflags.zk"; done

If anything other than “No such file or directory” comes back, or a parameter match is returned, the upgrade guide asks you to contact Nutanix support to remove this setting.
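On a bigger cluster it can be handy to have each CVM's result flagged rather than eyeballed. The following is only a minimal sketch and not part of the upgrade guide: `classify_extent_cache` is a hypothetical helper name, and it assumes the only harmless outcomes are empty output or a "No such file or directory" message.

```shell
#!/bin/sh
# Hypothetical helper: decide whether the output of the extent_cache grep is harmless.
# Reads the captured output on stdin and prints "ok" or "contact-support".
classify_extent_cache() {
    output=$(cat)
    case "$output" in
        "" | *"No such file or directory"*) echo "ok" ;;
        *) echo "contact-support" ;;
    esac
}

# Usage on a CVM (svmips is the Nutanix helper used in the loop above; not run here):
# for i in $(svmips); do
#     printf '%s: ' "$i"
#     ssh "$i" "grep extent_cache ~/config/stargate.gflags.zk" 2>&1 | classify_extent_cache
# done
```

Redirecting stderr into the pipe matters here, because the "No such file or directory" message is written to stderr by grep.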

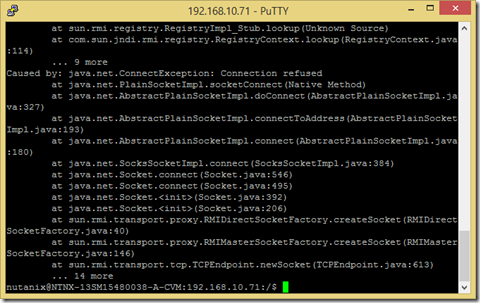

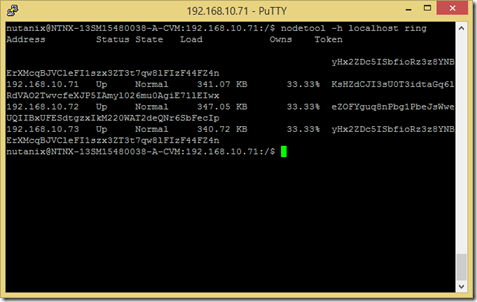

- We need to confirm that all hosts are part of the metadata store with the following command:

nodetool -h localhost ring

Hmm… Running that command returned a heap of errors. Maybe my cluster needs to be running for this command to work? Let’s run “cluster start” and try again.

Ok, that looks more like what I’m expecting to see!

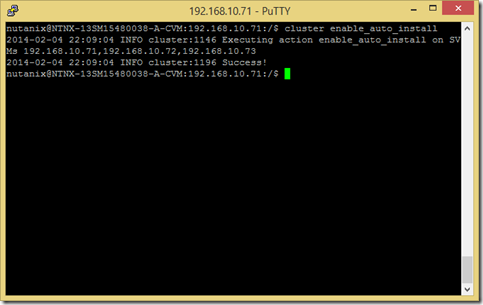

- I’m skipping the steps in the guide that say to check the hypervisor IP and password since I know they’re still at factory default. Now I need to enable automatic installation of the upgrade.

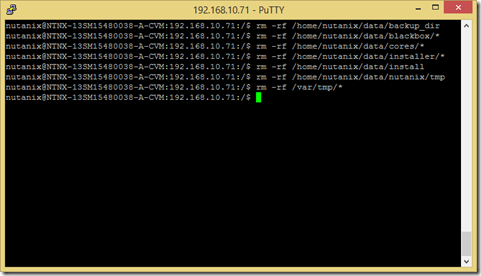

- Log onto each CVM and remove core, blackbox, installer and temporary files using the following commands:

rm -rf /home/nutanix/data/backup_dir

rm -rf /home/nutanix/data/blackbox/*

rm -rf /home/nutanix/data/cores/*

rm -rf /home/nutanix/data/installer/*

rm -rf /home/nutanix/data/install

rm -rf /home/nutanix/data/nutanix/tmp

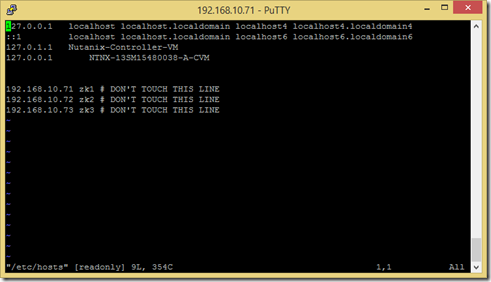

rm -rf /var/tmp/*

- The guide says to check the CVM hostname in /etc/hosts and /etc/sysconfig/network to see if there are any spaces. If we find any, we need to replace them with dashes.

No spaces here!

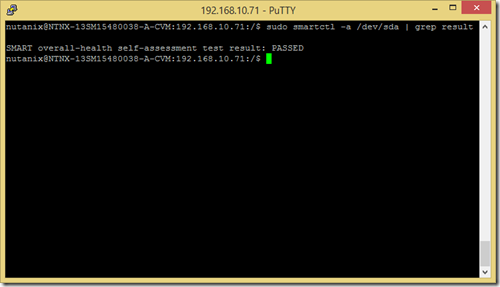
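The space check is easy to script if you have more than a handful of CVMs. This is a minimal sketch, assuming the hostname has already been read into a variable; `has_spaces` and `spaces_to_dashes` are hypothetical helper names and the sample hostname is made up for illustration.

```shell
#!/bin/sh
# Return success (0) if the given hostname contains a space.
has_spaces() {
    case "$1" in
        *" "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Print the hostname with every space replaced by a dash.
spaces_to_dashes() {
    printf '%s\n' "$1" | tr ' ' '-'
}

# Example with a made-up hostname:
name="lab cvm 1"
if has_spaces "$name"; then
    spaces_to_dashes "$name"   # prints lab-cvm-1
fi
```

The sketch only prints the corrected name; actually editing /etc/hosts and /etc/sysconfig/network is left as a manual step.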

- On each CVM, check that there are no errors with the controller boot drive with the following command:

sudo smartctl -a /dev/sda | grep result
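If you would rather get a yes/no answer than read the grep output, something like the following could work. It is a sketch under an assumption: the drive reports the ATA-style line "SMART overall-health self-assessment test result: PASSED". `smart_passed` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read smartctl output on stdin and succeed only if the
# overall-health line reports PASSED (ATA-style output is assumed).
smart_passed() {
    grep -q 'test result: PASSED'
}

# Usage on a CVM (not run here):
# if sudo smartctl -a /dev/sda | smart_passed; then
#     echo "boot drive OK"
# else
#     echo "boot drive needs attention"
# fi
```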

- If I had replication, I would need to stop it before powering off my CVMs. However, since this is a brand new block, it’s highly unlikely that I have it set up.

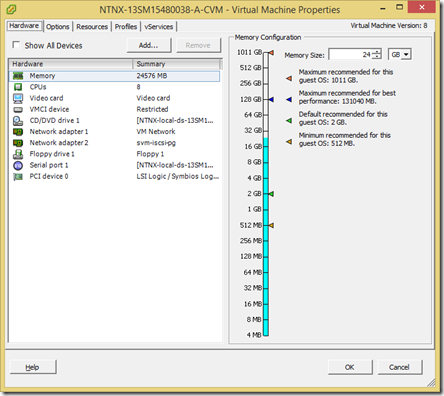

- Edit the settings for the CVM and allocate 16 GB of RAM, or 24 GB of RAM if you want to enable deduplication. In production, this requires shutting down the CVMs one at a time: change the setting, power the CVM back up, wait to confirm that it is back up and part of the cluster again, and only then shut down the next CVM to modify it. However, since there are no production VMs running in the lab, I can just stop the cluster services, shut down all of the CVMs, make the change, and then power them all back on.

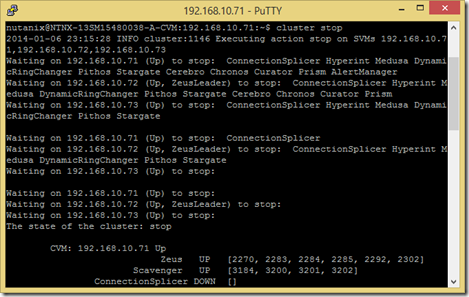

To stop cluster services on all CVMs that are part of a storage cluster, log onto one of the CVMs and use the command:

cluster stop

We can confirm that cluster services are stopped by running the command:

cluster status | grep state

We should see the output: The state of the cluster: stop.

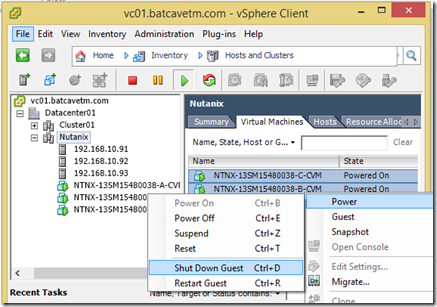

We can now use the vSphere client, vSphere Web Client, PowerCLI, or whatever floats your boat to power off the CVMs and make the RAM changes.

- Power the CVMs back on, grab a tasty beverage of your choice, then check to see if all of the cluster services have started using: cluster status | grep state. The state of the cluster should be “start”.
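Instead of re-running the status command by hand while the beverage cools, the check can be polled. This is a rough sketch, assuming the status line looks like "The state of the cluster: start" as shown earlier; `wait_for_cluster_state` is a hypothetical helper, and the attempt count and delay are arbitrary defaults.

```shell
#!/bin/sh
# Hypothetical helper: poll "cluster status" until the reported state matches
# the wanted one ("start" or "stop"), or give up after a number of attempts.
# Arguments: wanted state, max attempts (default 30), seconds between attempts (default 10).
wait_for_cluster_state() {
    wanted=$1
    attempts=${2:-30}
    delay=${3:-10}
    n=0
    while [ "$n" -lt "$attempts" ]; do
        if cluster status 2>/dev/null | grep state | grep -q "$wanted"; then
            return 0
        fi
        n=$((n + 1))
        sleep "$delay"
    done
    return 1
}

# Usage on a CVM (not run here):
# wait_for_cluster_state start && echo "cluster is up"
```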

- Next we need to disable email alerts:

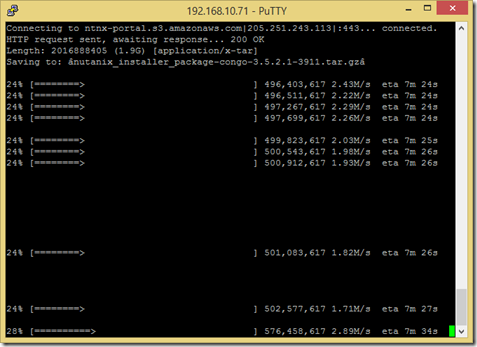

ncli cluster stop-email-alerts

- Upload the Nutanix OS release to /home/nutanix on the CVM. Or, if you’re lazy like me, just copy the link from the Nutanix support portal and use wget.

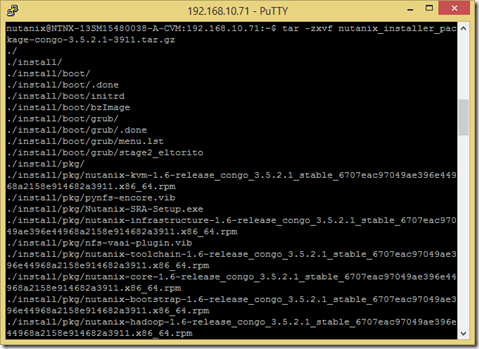

- Expand the tar file:

tar -zxvf nutanix_installer*-3.5.2.1-*

(Or, if you’re lazy, tab completion works as well.)

- Start the upgrade

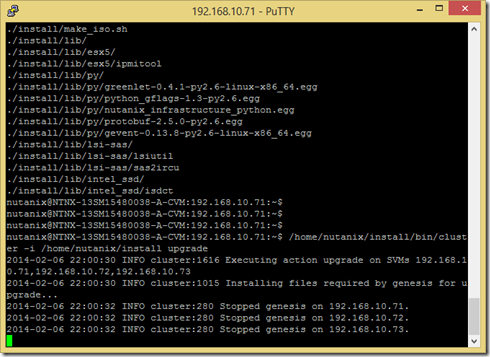

/home/nutanix/install/bin/cluster -i /home/nutanix/install upgrade

Here we go!

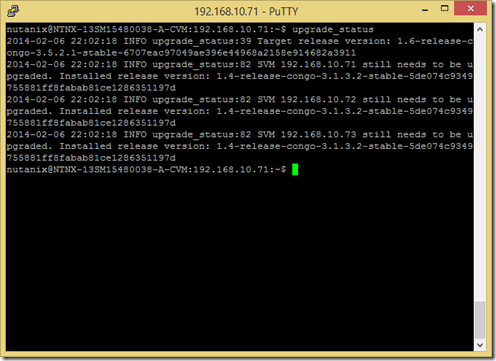

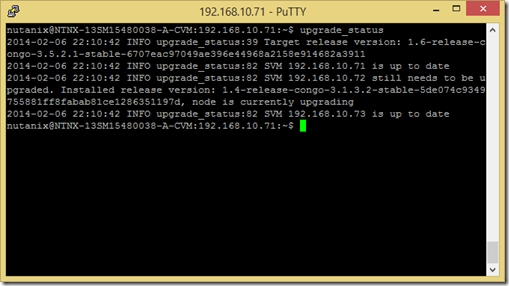

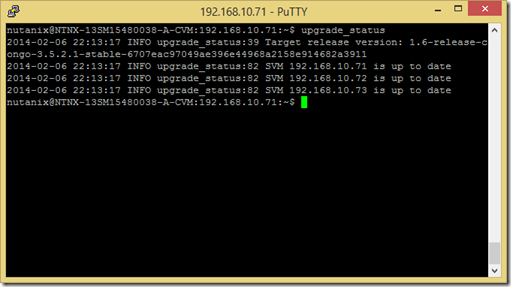

- You can check the status of the upgrade with the command upgrade_status.

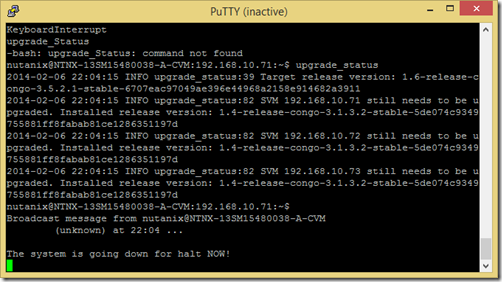

You’ll know the upgrade is progressing when the CVM that you’re logged into decides to reboot.

8 minutes later… One down two to go!

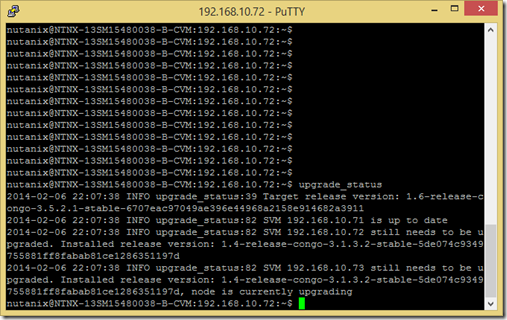

11 minutes in…

13 minutes later… up to date!

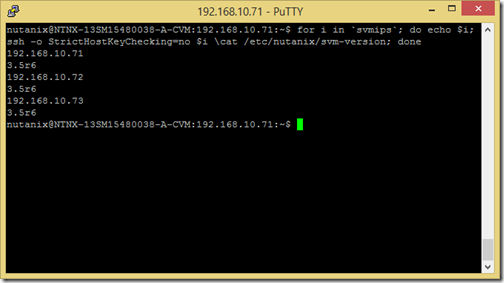

- Confirm that the controllers have been upgraded to 3.5 with the following command:

for i in

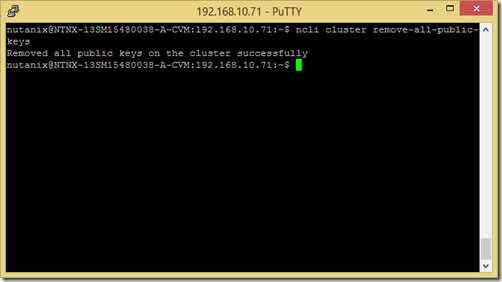

svmips; do echo $i; ssh -o StrictHostKeyChecking=no $i \cat /etc/nutanix/svm-version; done - Remove all previous public keys:

ncli cluster remove-all-public-keys

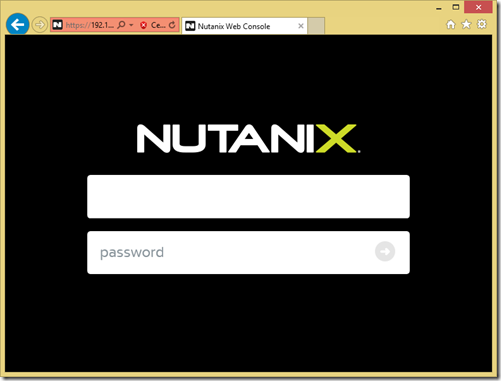

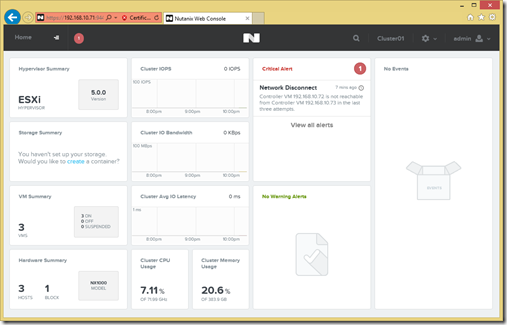

- Sign in to the web console:

Behold the PRISM UI!