Nutanix is running a marketing campaign #NixVblock. As part of the marketing campaign they had a video that I really can’t describe better than the way Sean Massey put it:

“VBlock is supposed to be an uninteresting, high maintenance woman who hears three voices in her head and dresses like three separate people.

The “VBlock” character is supposed to represent the negatives of the competing VCE vBlock product. Instead, it comes off as the negative stereotype of a crazy ex that has been cranked past 11 into offensive territory.”

While I was not personally offended by the video, it was inappropriate, and I was very disappointed. It had the feeling of an inside joke that you tell to someone who wasn’t involved, and then you come off as an insensitive jerk. You didn’t mean to be an insensitive jerk, you just wanted to let your new friend in on the joke too. When you turn that joke into a marketing video for your company, compare your competitor to a crazy date, and broadcast it to the world in an official marketing campaign, that is sexist and immature. Would VCE put out a video like that? The immaturity of the video just makes Nutanix come out looking like the underdog that they are… nipping at the heels of VMware, Cisco and EMC.

Since Nutanix is still a startup, perhaps they still have interns running the marketing department? I really only need to ask the marketing department one question to illustrate why I am upset that they chose such an immature way to communicate their product’s technical superiority to vBlock (which the video doesn’t even attempt to address). Who is the intended audience of that video? Is it customers who haven’t purchased Nutanix before but are also considering VCE? Consider that many of the organizations among my US Federal customers are run by women. Is that video something I should point them to that will make them choose Nutanix over VCE? Is that video going to help convince them that Nutanix is actually the more mature, feature-rich product?

I have actually experienced trying to procure VCE for a project. VCE is actually a separate company that resells VMware, Cisco and EMC in one package. Their pitch is that the value-add is support that is qualified on all three products and won’t redirect you to VMware, Cisco or EMC. But in reality this only helps tier 1 sysadmins. If you forget to check a box, VCE will help you, but if you encounter a serious bug in one of the technologies, you are going to get redirected to the source. Also, when I tried to procure VCE, it came out as SIGNIFICANTLY more expensive than just buying the components separately and putting them together myself… I guess that VCE SME has to eat too?! Imagine that… putting in a middle man costs more money rather than less… Who would have thought it?!

Another disadvantage you have with VCE is that you lose the ability to compete the internal components. For example, I lose the ability to compete VMware with Citrix, Cisco with Brocade or Arista, and EMC with NetApp, and it is that competition that lowers costs for my customers. I also had a requirement for support from US citizens on US soil, and at the time the VCE rep couldn’t tell me whether they offered it… i.e., I was going to get redirected to the component supplier when I called support anyway. In the end, I just bought the separate VMware, Cisco, and EMC components and bolted them together myself.

Of course, that was long before Nutanix. Which brings me to the title of this post. All Nutanix really had to do was highlight the features that Nutanix has that vBlock doesn’t have. Let’s compare.

| Nutanix | vBlock |

| Built-in VM aware Disaster Recovery integrated into GUI with N:Many replication | Not Built in. Can buy RecoverPoint for Block replication and MirrorView for file replication. Not VM aware unless you’re talking about vSphere replication, but that’s not really storage-level replication |

| VM aware storage snapshots | Block or File level snapshots |

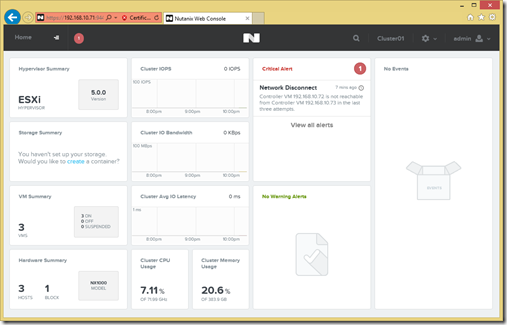

| Simple web based GUI interface | Cluttered Java interface that I can only get to when I alter security policies to allow some version of java 5 releases old. |

| Storage Controller on every node | 2 Storage Controllers |

| Infinitely scalable | Forklift upgrade |

| Shared nothing architecture | Shared Everything Architecture |

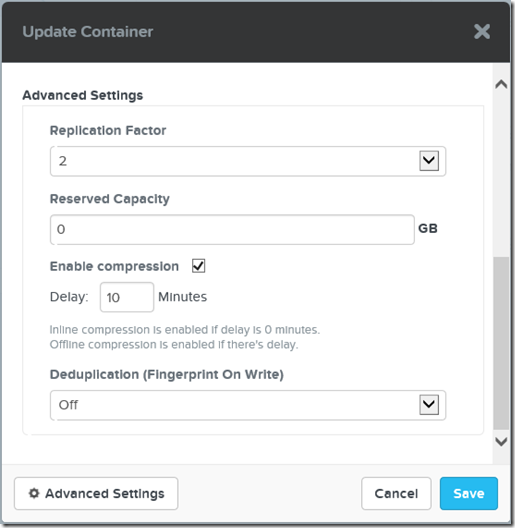

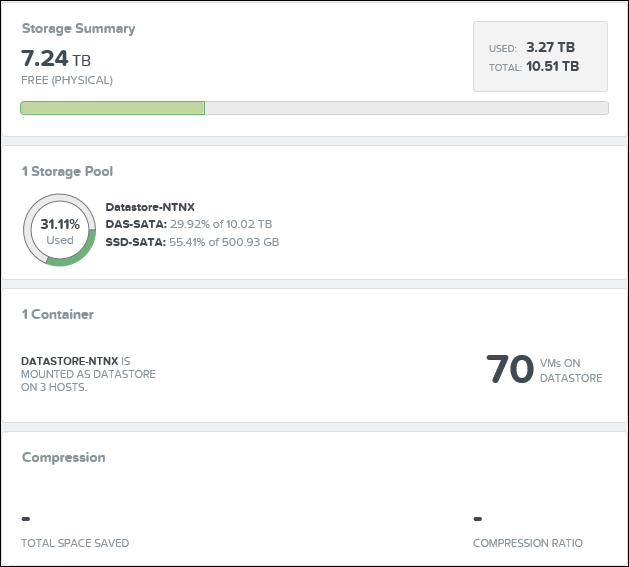

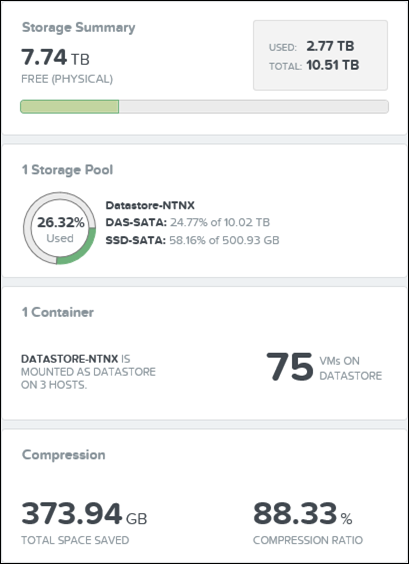

| Built in Compression / Deduplication | Why would you compress / dedupe? How would VCE make you buy more disks? |

| Shadow Clones | Nothing like shadow clones. |

| Built in storage analytics that detail IO by disk, VM and node | Not Built in. You can buy the EMC Storage Analytics plug-in for vCOPS for $20K. |

| Prism Central management interface can span multiple clusters | You can argue that Unisphere can do this too, but is still in Java and sucks. |

I could sit here for an hour adding to this list, but I think I’ve made my point.

Nutanix, please don’t fire anyone for failing with that video. We can forgive you, and you need to allow people to make mistakes, learn and grow from them, but going forward please stick to marketing your strengths. You don’t need to put anyone down; what you are doing stands out on its own. Take the high road and you’ll win more friends. I also get that it may have grown out of an inside joke, and sometimes it is hard to see potential complications from the inside, but you have enough money to hire an external PR agency to review future marketing campaigns.