The network between my desk and our lab is controlled by corporate IT. To reach lab equipment from my desk, I had to set up a simple PPTP VPN running on a Windows Server 2008 R2 VM. Needless to say, it doesn’t provide the best throughput, but it meets about 95% of my needs.

Enter the View 4.6 PCoIP Secure Gateway. Since the Secure Gateway proxies the PCoIP connection, I no longer needed the VPN…

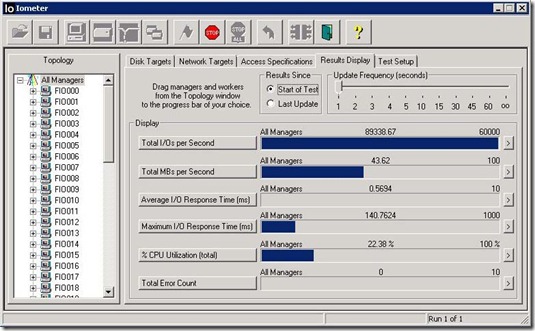

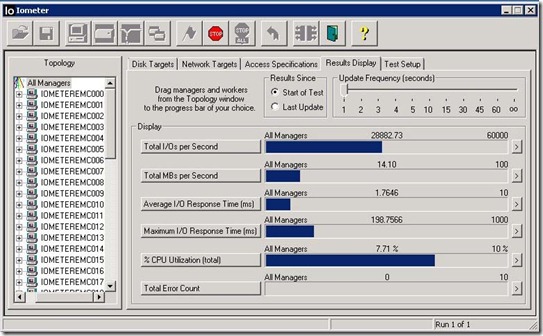

However, like any good engineer, once I had some free cycles I decided to test the maximum video performance of View. I fired up Band of Brothers Episode Two and watched it at an amazing 5 frames per second. I logged onto my Wyse P20 and saw that the “Active Bandwidth Limit” was set to 7000 Kb/s.

Nothing I tried seemed to improve the performance until my local VMware Sales Engineer stopped by and I showed him the video. He gave me some suggestions for improving it, but mentioned that the video looked like it was being rate limited. This set off a spark in my head, and after a couple of hours googling for “VMware View rate limit” I stumbled upon the answer… provided by our friends at EVGA.

Turns out that when you enable a Secure Gateway Server, you are limited to AES-128-GCM encryption and 7000 Kb/s… which makes sense for using View over the WAN. When I unchecked the box “Use PCoIP Secure Gateway for PCoIP connections to the desktop” in the General tab of View Connection Server Settings, I was able to get much better video performance… up to 24 frames per second with the zero client resolution set to 1280×1024.

When I turned the resolution up to the full 1920×1200 of my monitor, I was back to 5 frames per second. It seems that the Salsa20-256-Round12 encryption is limited to 20 Mb/s. Perhaps this will get better in View 5.0?
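To get a feel for why the frame rate falls apart at the higher resolution, here is a rough back-of-the-envelope sketch. It assumes the two caps mentioned above (7000 Kb/s and 20 Mb/s), assumes the monitor's native mode is 1920×1200, and assumes the worst case where every pixel changes on every frame. PCoIP only sends what changed, so treat this as a crude budget, not a model of the protocol. The function name `bits_per_pixel` is just my own helper.

```python
def bits_per_pixel(limit_bps: float, width: int, height: int, fps: float) -> float:
    """Average bits available per pixel per frame under a bandwidth cap,
    assuming (pessimistically) that every pixel changes on every frame."""
    return limit_bps / (width * height * fps)


# Caps taken from the post; treating Kb/Mb as decimal kilobits/megabits (assumption).
CAPS = {"7000 Kb/s": 7_000_000, "20 Mb/s": 20_000_000}

# 1920x1200 is an assumed native resolution for the monitor.
MODES = {"1280x1024": (1280, 1024), "1920x1200": (1920, 1200)}

TARGET_FPS = 24  # the best frame rate observed at 1280x1024

if __name__ == "__main__":
    for cap_name, bps in CAPS.items():
        for mode_name, (w, h) in MODES.items():
            bpp = bits_per_pixel(bps, w, h, TARGET_FPS)
            print(f"{cap_name:>10}  {mode_name:>9} @ {TARGET_FPS} fps: {bpp:.2f} bits/pixel")
```

Under the same cap, 1920×1200 has roughly 1.76 times as many pixels as 1280×1024, so the per-pixel budget for full-motion video shrinks by the same factor. That is consistent with the frame rate dropping when I raised the resolution, though it doesn't by itself explain a fall all the way to 5 frames per second.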